Computational models of visual attention - Scholarpedia. A computational model of visual attention not only includes a process description for how attention is computed, but also can be tested by …

A Computational Perspective on Visual Attention

A Computational Perspective on Visual Attention. … Computational Vision at York University, and a Fellow of the Royal Society of Canada (FRSC). Praise: The algorithmic architecture of Tsotsos' updated …

Itti (2001) Computational modelling of visual attention

Itti (2001) Computational modelling of visual attention. The first explicit, neurally plausible computational architecture for controlling visual attention was proposed by Koch and Ullman [19] in 1985 (Fig. 1) (for an …
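
The Koch and Ullman architecture is commonly described as a saliency map read out by a winner-take-all stage, with inhibition of return pushing attention on to the next location. The sketch below illustrates that selection loop on a precomputed 2D saliency map. It is a minimal sketch under stated assumptions: the saliency map is given (no feature extraction), winner-take-all is a plain argmax rather than a neural network, and the function name `attend` and the square inhibition neighbourhood controlled by `ior_radius` are hypothetical choices for illustration, not details taken from the paper.

```python
from typing import List, Tuple

SaliencyMap = List[List[float]]


def attend(saliency: SaliencyMap, n_shifts: int, ior_radius: int = 1) -> List[Tuple[int, int]]:
    """Return up to n_shifts attended (row, col) locations, in order.

    Hypothetical helper. Winner-take-all is modelled as a plain argmax over the
    map; inhibition of return sets a square neighbourhood of radius ior_radius
    around each winner to -inf so attention moves on to the next most salient
    location.
    """
    s = [row[:] for row in saliency]  # copy: the caller's map is left untouched
    rows, cols = len(s), len(s[0])
    fixations: List[Tuple[int, int]] = []
    for _ in range(n_shifts):
        # Winner-take-all; max() keeps the first maximum in row-major order on ties.
        r0, c0 = max(((r, c) for r in range(rows) for c in range(cols)),
                     key=lambda rc: s[rc[0]][rc[1]])
        if s[r0][c0] == float("-inf"):
            break  # every location has already been inhibited
        fixations.append((r0, c0))
        # Inhibition of return around the attended location (assumed square shape).
        for r in range(max(0, r0 - ior_radius), min(rows, r0 + ior_radius + 1)):
            for c in range(max(0, c0 - ior_radius), min(cols, c0 + ior_radius + 1)):
                s[r][c] = float("-inf")
    return fixations


saliency = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.1],
    [0.0, 0.1, 0.2, 0.7],
    [0.0, 0.0, 0.3, 0.4],
]
print(attend(saliency, n_shifts=2))  # [(1, 1), (2, 3)]
```

Without the inhibition step, the argmax would lock onto the single most salient location forever; suppressing the winner's neighbourhood is what turns a static map into a sequence of attentional shifts.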

Computational Architecture of the Parieto-Frontal Network

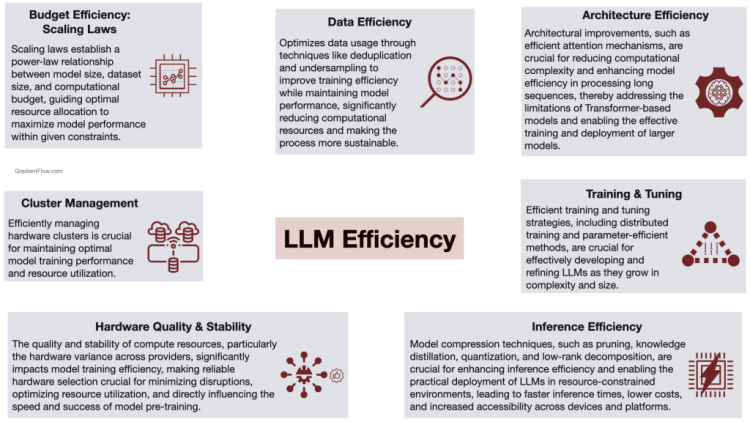

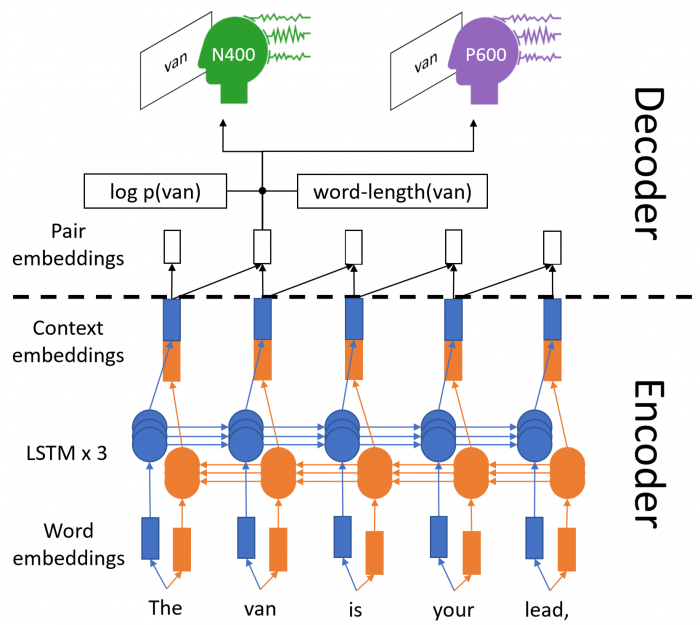

*The Efficient Frontier of LLMs: Better, Faster, Cheaper - Gradient *

Computational Architecture of the Parieto-Frontal Network. … This cluster is primarily connected with (i) the CING (27); (ii) the ventral orbitofrontal areas (voPFC, 19.0); and (iii) oculomotor and attention- …

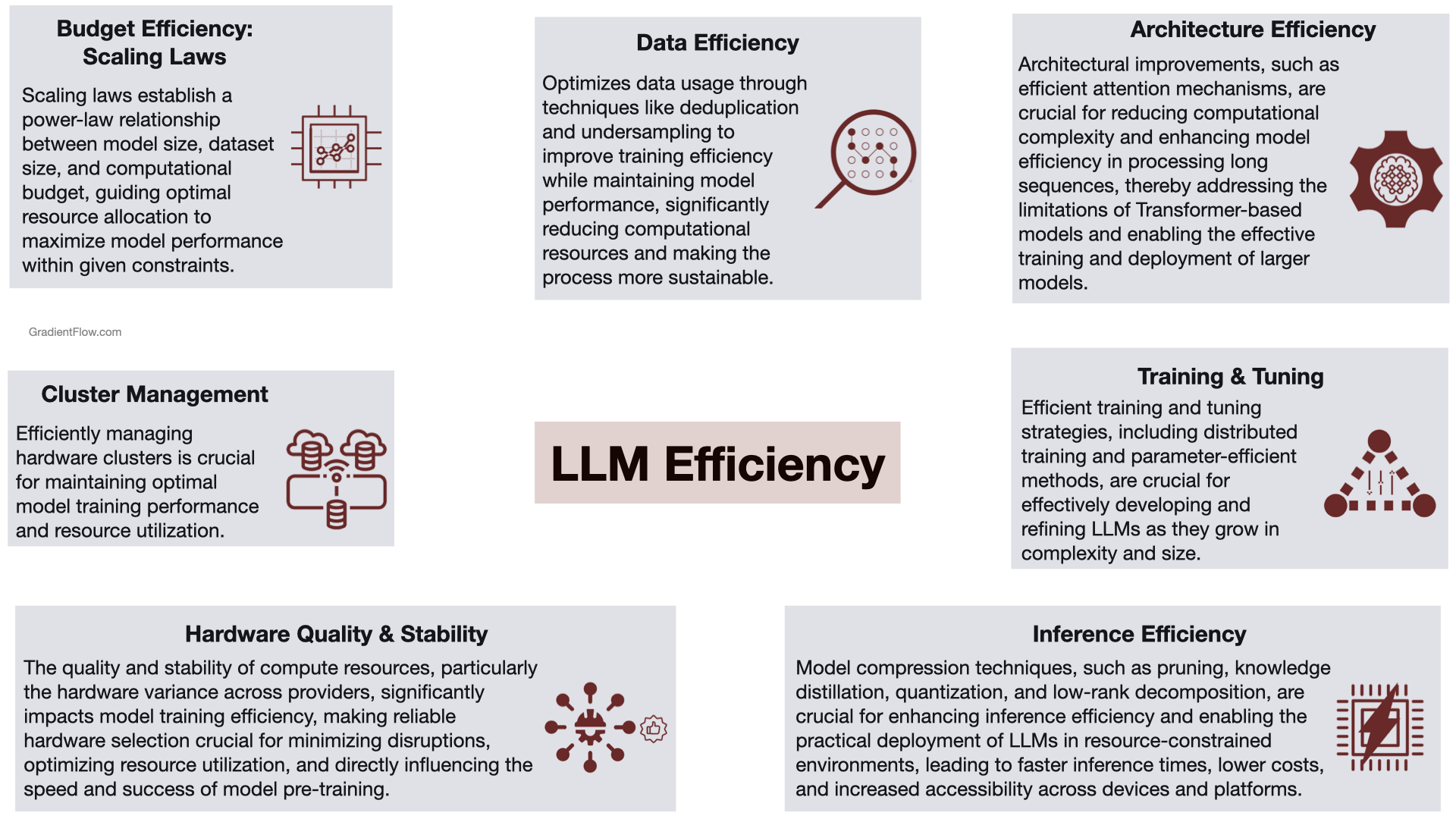

machine learning - Computational Complexity of Self-Attention in the

machine learning - Computational Complexity of Self-Attention in the … The Transformer architecture, which has a computational complexity of O(n^2·d + n·d^2) for sequence length n and representation dimension d, is still much faster than an RNN in terms of wall-clock time, …
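
The O(n^2·d + n·d^2) figure can be made concrete by counting multiply-accumulates (MACs) in a naive implementation. The sketch below is a minimal single-head scaled dot-product self-attention in pure Python, assuming square d x d projection matrices and no output projection (both simplifications for illustration); the helper names `matmul`, `softmax_rows`, and `self_attention` are hypothetical. The three projections cost 3·n·d^2 MACs, and the score matrix Q·K^T and the weighted sum over V cost n^2·d MACs each.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix, macs: List[int]) -> Matrix:
    """Naive (p x q) @ (q x r) product; adds p*q*r multiply-accumulates to macs[0]."""
    p, q, r = len(a), len(b), len(b[0])
    assert all(len(row) == q for row in a), "inner dimensions must agree"
    out = [[sum(a[i][k] * b[k][j] for k in range(q)) for j in range(r)]
           for i in range(p)]
    macs[0] += p * q * r
    return out


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, int]:
    """Single-head scaled dot-product self-attention over n tokens of width d.

    Returns the n x d output and the number of MACs spent in matrix products
    (the O(n^2) scaling and softmax work is not counted).
    """
    macs = [0]
    d = len(x[0])
    q = matmul(x, wq, macs)                 # n x d, costs n*d*d
    k = matmul(x, wk, macs)                 # n x d, costs n*d*d
    v = matmul(x, wv, macs)                 # n x d, costs n*d*d
    scores = matmul(q, transpose(k), macs)  # n x n, costs n*d*n
    scale = 1.0 / math.sqrt(d)
    weights = softmax_rows([[s * scale for s in row] for row in scores])
    out = matmul(weights, v, macs)          # n x d, costs n*n*d
    return out, macs[0]


n, d = 4, 2
x = [[float(i + j) for j in range(d)] for i in range(n)]
eye = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
out, macs = self_attention(x, eye, eye, eye)
print(macs)  # 3*n*d^2 + 2*n^2*d = 48 + 64 = 112
```

Doubling n doubles the projection term but quadruples the score and weighted-sum terms, which is why the n^2·d part dominates for long sequences. Unlike an RNN, none of these products depends on the previous token's result, so they parallelize well.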

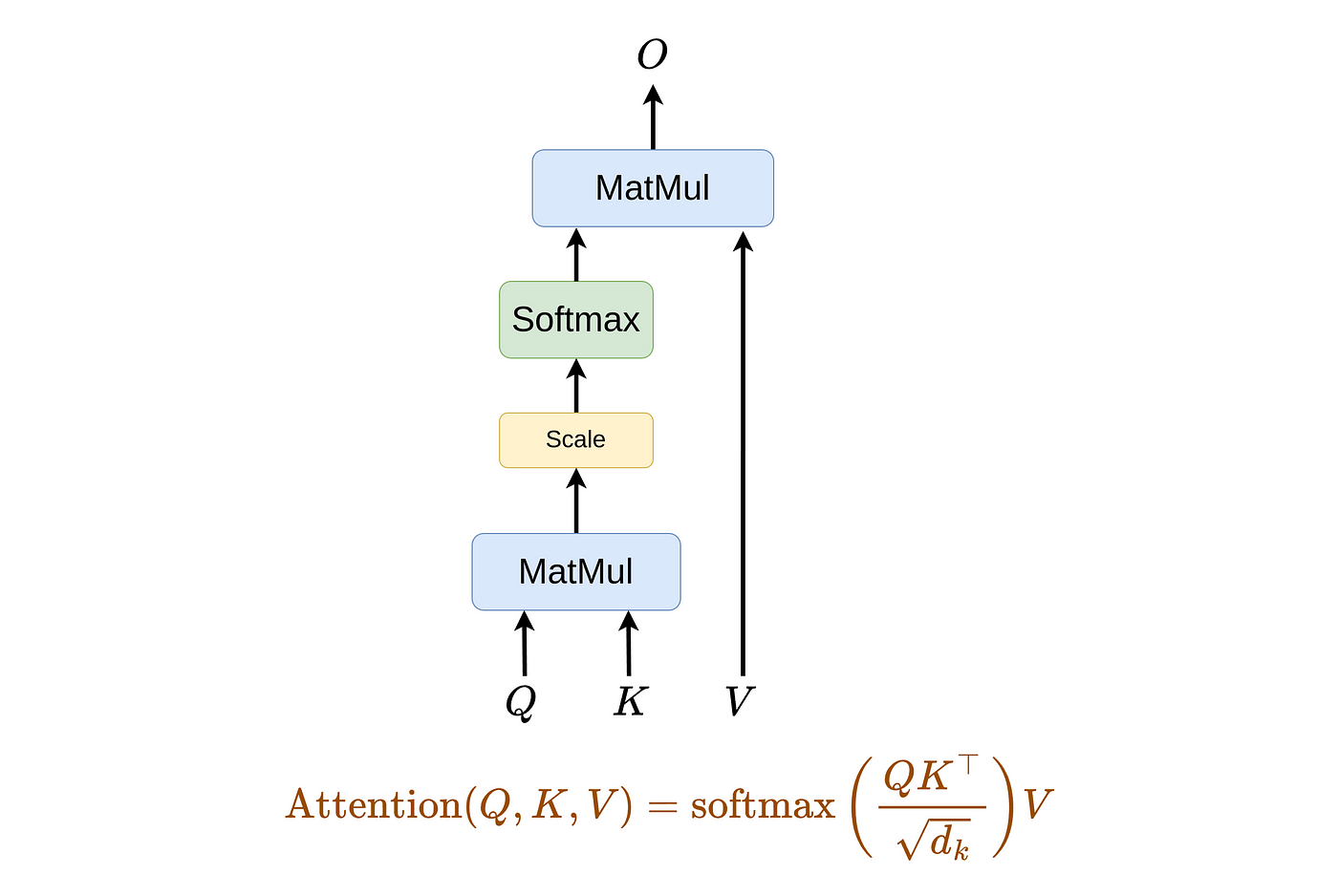

EMOCPD: Efficient Attention-based Models for Computational

EMOCPD: Efficient Attention-based Models for Computational Protein Design Using Amino Acid … (arXiv:2410.21069)

Computational models of music perception and cognition I: The

Computational models of music perception and cognition I: The … computation. We cover generic computational architectures of attention, memory, and expectation that can be instantiated and tuned to deal with specific …

Ernst Niebur - Google Scholar

Ernst Niebur - Google Scholar. Research area: computational neuroscience. Selected publication: E. Niebur, "Computational architectures for attention," in The Attentive Brain, 1998.